Decentralized AI

Dima Starodubtsev from cyb.ai spoke about the prospects of an emerging direction in web3 — decentralized artificial intelligence.

Automatically translated by AI

Decentralized Artificial Intelligence

Decentralized artificial intelligence is not the next big thing, it is the last big thing.

We are witnessing the AI hype, including the hot topic of decentralized AI. Unfortunately, most decentralized projects on the market can barely justify claiming any connection to decentralized AI. Let’s try to clarify one of the most important vectors of technological development that has not yet taken shape. If you are interested in something exciting at the intersection of blockchain, AI, and web3, this post will likely save you a lot of time by bypassing all the existing crap on the market that pretends to be decentralized AI but in fact isn’t. This post is not a comprehensive technical deep dive into AI or decentralized AI, but rather a perspective based on the observations of Dima Starodubtsev, who has been researching and building in this area for the past 7 years.

What is AI?

In essence, artificial intelligence is a large dataset represented as a weighted graph. Usually, the dataset is huge, with billions or even trillions of data points. The data points and weights in this graph are computed by a training algorithm. The most popular approach today is the transformer, an architecture based on attention. It was proposed in 2017 and has since become ubiquitous: ChatGPT, for example, is based on transformers.
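
To make "based on attention" concrete, here is a minimal sketch of scaled dot-product attention in plain Python. It is an illustration only: the function names are ours, there are no learned projection matrices, no multiple heads, and no batching, all of which real transformers add on top.

```python
import math

def softmax(xs):
    # Subtract the maximum for numerical stability before exponentiating.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Each output is the attention-weighted average of the value vectors.
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs
```

When all keys are identical, every value receives the same weight, so the output is simply the average of the values.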

Fun fact: one of the authors of the transformer paper is a co-founder of NEAR.

This dataset is usually trained on GPU clusters, for example, on DGX servers. In general, training is a very costly process. Tech giants can afford custom ASICs such as TPUs. After training, the dataset can be used for information retrieval in a process called inference. Inference is also a computationally expensive process, which is why all AI services are currently quite expensive.

Modern AI is capable of very complex reasoning, but overall, it’s bad. There are several reasons for this:

- it lies;

- it censors;

- it is incapable of precise answers;

- its brain is run by government propaganda;

- it doesn’t want you to have sex and reproduce;

- it has been monopolized by governments in alliance with tech giants.

There are many discussions about AI safety, but in the end, safety means trusting big tech companies and governments, which is unacceptable. According to Nick Bostrom’s vision, existing AI is a controlled “box” and not the only path. There are four other paths:

- whole brain emulation;

- biological cognition;

- brain-computer interfaces;

- networks and organizations.

The first three paths cannot be used in production right away, but thanks to consensus computing we are already making progress on the fourth: cooperation. Therefore, in Dima Starodubtsev’s opinion, it’s better to focus on collaborative artificial intelligence, which has more potential because intelligence is, in its essence, collective. This doesn’t exclude other development directions, but a key focus on collaboration is necessary for our survival in the next decade.

What is Decentralized Artificial Intelligence?

Defining the domain

This domain does not yet exist, so it is important to correctly define the properties of the system so that we are not deceived.

Dima Starodubtsev came to the following consumer definition:

A fault-tolerant censorship-free network of parallel verifiable computations that incentivizes collaborative learning of content.

Thus, it is a kind of reliable super-intelligent symbiotic network of computers and biological beings that play a win-win game to discover knowledge.

Let’s clarify the five properties that follow from this definition. As will become clear, no existing project meets all of them:

Spoflessness

Easy to explain but hard to achieve:

Spofless: without a single point of failure.

Spoflessness is a complex property.

- Spoflessness goes beyond classical Byzantine fault tolerance.

- For example, you may have a perfect BFT PoS blockchain, but if one address controls more than one third of the tokens, the system cannot be fault-tolerant, because that address alone can effectively halt it.

- The same is true for systems without fully open-source code.

Spoflessness appears to be the most important property of super-intelligent systems.
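
The token-concentration example can be checked mechanically. The sketch below is a simplification under stated assumptions: the function name and the stake table are hypothetical, stakes are integers, and only single addresses are considered, not colluding groups or other failure points such as closed-source code.

```python
from fractions import Fraction

def halting_addresses(stakes, threshold=Fraction(1, 3)):
    """Return addresses whose share of total stake exceeds the threshold.

    `stakes` maps an address to an integer token amount. In a BFT PoS
    system, any address listed here can stop consensus on its own.
    """
    total = sum(stakes.values())
    return [
        addr for addr, amount in stakes.items()
        if Fraction(amount, total) > threshold
    ]

# Hypothetical distribution: "whale" holds 34 of 100 tokens.
example = {"whale": 34, "alice": 33, "bob": 33}
single_points_of_failure = halting_addresses(example)
```

Exact fractions are used instead of floats so that a share of exactly one third is not misclassified by rounding.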

Censorship resistance

Cypherpunks say:

As long as they can see you transacting, they can control you.

Thus, censorship resistance is almost unattainable without a fully homomorphic state evolved with some zero-knowledge magic.

Only one FHE blockchain in production is known, but ZKP implementation on DVM has not been developed. As you will see later, privacy alone is not enough for decentralized artificial intelligence. Without strong censorship resistance, superintelligence will be compromised, so it’s better to achieve this sooner rather than later.

Provable parallel computations

In 2006, a group of scientists from Berkeley identified 13 classes of algorithms that are inherently parallel.

These algorithms underpin modern AI computation.

Thus, a computer that reaches consensus on AI computations must be able to compute at least some of them. The most advanced blockchains on the market are calculators that, at best, can process transactions in parallel with some tricks. If you try to multiply a large enough matrix in any of them, you’ll be very disappointed. If we are talking about AI consensus, we must create consensus computers capable of computing on many cores, be it a GPU or some specialized tensor processor.

Another major problem with AI and consensus computing is floating-point determinism, and this is likely the reason why we still don’t have many truly parallel consensus computers.
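
The determinism problem comes from the fact that floating-point addition is not associative, so two nodes that sum the same numbers in a different order (as parallel hardware often does) can disagree on the result. The sketch below shows the effect and one possible workaround, fixed-point integers; the scale factor is an arbitrary assumption for illustration, not something any particular chain uses.

```python
def naive_sum(values):
    # Left-to-right accumulation, as a single sequential core would do.
    total = 0.0
    for v in values:
        total += v
    return total

values = [0.1, 0.2, 0.3]
forward = naive_sum(values)             # 0.6000000000000001
backward = naive_sum(reversed(values))  # 0.6

# Fixed-point workaround: integer addition is associative, so every
# summation order yields the same result on every node.
SCALE = 10**6  # assumed precision: six decimal places
fixed = [round(v * SCALE) for v in values]
fixed_forward = sum(fixed)
fixed_backward = sum(reversed(fixed))
```

Here `forward != backward`, which would break consensus, while `fixed_forward == fixed_backward` regardless of order.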

Let’s touch on some of the coolest technology directions, such as:

- WebGPU;

- automatic parallelization;

- tensor processing;

- BLAS computations;

- the Rust ML ecosystem as a whole.

Wrapping up this section, it’s clear that GPU consensus computers will have significant AI advantages over existing market calculators.

Incentives For Collaborative Learning

The key ingredient for the development of decentralized artificial intelligence lies in creating the right economic models that incentivize knowledge extraction. Therefore, we cannot model the system in the old way here.

We must model the system as multi-component. But when you try to find a way to reward learning from inflation, you run into some of the most challenging problems:

- Sybil behavior;

- Selfish behavior;

- Coercion and collusion.

Recently, Bittensor made a small revolution in rewarding nodes for learning, so we must carefully study their trust evaluation mechanism for further development.

Content Addressing

It does not matter what we learn if we cannot share the results with each other.

The future of artificial intelligence lies in content addressing models.

The existing AI field still has not caught the revolution behind content addressing.

- LLMs are hard to distribute;

- Existing models are typed and cannot perform inference with any content.

Learning models with content addressing are the future, and they solve some of the biggest problems. The sooner the industry realizes this, the faster we will get protection against malicious AI. The Bostrom project is based on a content addressing architecture for learning. Many problems still need to be solved, but it is becoming obvious that content-addressed machine learning is a real direction.
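
For readers new to the idea, content addressing means that data is named by a hash of its bytes rather than by its location. The sketch below is a simplified illustration: it uses a plain SHA-256 hex digest as the address, whereas real IPFS CIDs add multihash and multibase encoding plus chunking. The `ContentStore` class is hypothetical.

```python
import hashlib

def content_address(data: bytes) -> str:
    # The address depends only on the bytes, not on who stores them.
    return hashlib.sha256(data).hexdigest()

class ContentStore:
    """In-memory store keyed by content address."""

    def __init__(self):
        self._blobs = {}

    def put(self, data: bytes) -> str:
        addr = content_address(data)
        self._blobs[addr] = data
        return addr

    def get(self, addr: str) -> bytes:
        data = self._blobs[addr]
        # Anyone can verify that the returned bytes match the address.
        if content_address(data) != addr:
            raise ValueError("content does not match its address")
        return data

store = ContentStore()
addr = store.put(b"any content: text, image, model weights")
```

Because the address is derived from the content, identical content always gets the same address, and results can be shared and verified without trusting the peer that serves them.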

Related Technologies

ZKP And AI

In principle, verifying CPU or GPU work is not a new idea, so potentially the entire range of zero-knowledge computers seems relevant, including but not limited to RISC Zero, Aleo, StarkNet, Mina, and zkSync. According to Dima Starodubtsev, they complement decentralized AI rather than serve as its foundation, for the following reasons:

- Limitations on content discovery due to the foggy nature of zk-computers;

- Hardware specificity;

- Variety of parallel computations.

Decentralized Storage, Decentralized Social Networks, And Artificial Intelligence

The formation of decentralized storage markets is critically important, as data is the holy grail of AI. But there is no clear leader in this area, so it seems decentralized AI projects should also focus on storage if they want to advance the industry. Very interesting changes are happening in the decentralized social networks sector. Every good ecosystem has social projects, and some, like Deso, are trying to create their own ecosystem. All decentralized social projects are a complement to the field of decentralized AI, which requires social data for training.

Who Is Already Working On The Technology

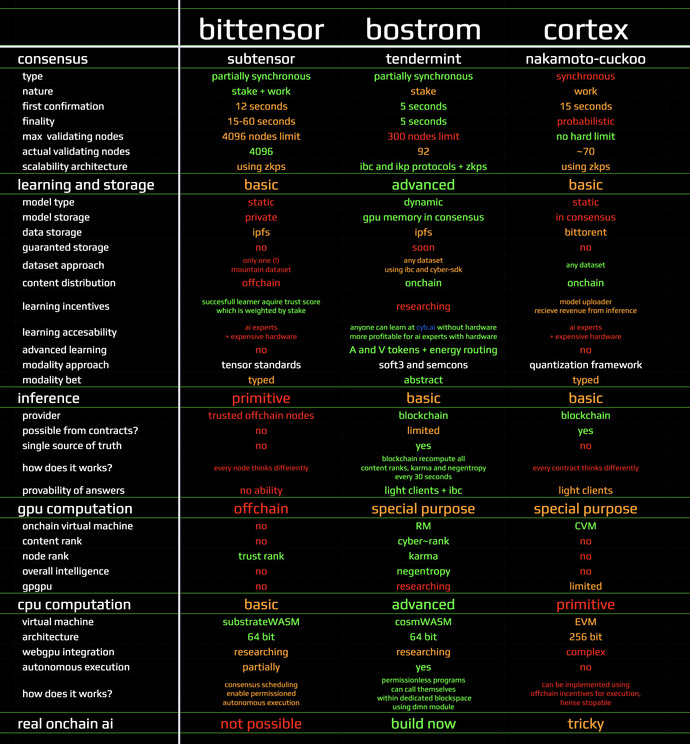

Based on this analysis, three projects on the market meet at least several of the key properties:

- PoW Blockchain Cortex;

- Parachain Bittensor;

- Cyber Project and the Bostrom bootloader.

All three projects are pioneers in the field of decentralized AI and have a working blockchain. There are certainly many teams rushing to jump on the train. These three were chosen because only they foresaw the industry long before it arrived. All three solve the problem differently and complement each other. Dima Starodubtsev believes that scaling will come from further innovations stimulated by these projects.

Cortex #CTXC

The first useful GPU blockchain, in production for more than 4 years and in development for over 5. It allows uploading quantized AI models to a Proof-of-Work blockchain. Model inference is executed on GPU in consensus, using a modified version of the EVM called CVM. Many GPU operators are available. There is a monetization model that pays those who upload models when applications use them. Recently, Cortex Labs demonstrated a revolutionary use case: provable on-chain machine learning with zero knowledge. A caring community was born at the intersection of AI and blockchain: the zkML community. Dima Starodubtsev strongly recommends joining it and exploring the outstanding awesome-zkml materials.

Overall, Cortex focuses on delivering the traditional AI workflow into the blockchain world: the blockchain serves as a reliable and censorship-resistant but expensive processor for specialized models. Currently, PoW uses about 100,000 GPUs, which essentially work as 1 cheap GPU, and about 70 nodes maintain the blockchain. To address accessibility, the project is starting to build a layered architecture. Despite being in production for over 4 years, the blockchain still does not attract independent developers or load the network with useful work, which is explained by the lack of documentation, tools, and working examples. Meanwhile, the team is rushing to create a second iteration of the protocol. Cortex can serve as an experimental platform for the transition from traditional AI to decentralized AI. Cortex has no direct competitors and is unique in its capabilities for experimentation, though it is very difficult for developers to understand.

Bittensor #TAO

A parachain in production for 1.5 years. Testnets took 1.5 years. In development for over 3 years. The idea is that nodes participating in training can rank each other based on mutual contribution to training with the assumption of an honest majority. All nodes train on the same dataset called "the mountain". Rewards are distributed based on node ranks. The approach is either brilliant or will not work. The idea seems simple and solid. But the devil is always in the details, so let’s discuss:

- Bittensor does not care about content ranking and distribution;

- The utility of the network comes from the ability to know the most intelligent node;

- Therefore, to use the network, you must trust the answers from a specific node;

- The honest majority assumption imposes strict requirements on the quality of token distribution;

- That is why the project chose the Satoshi distribution method, which expresses power and commands deep respect;

- The reward model seems resistant to gaming, as demonstrated in the whitepaper;

- This fact is a breakthrough;

- However, it is a deeply philosophical question of what constitutes a contribution to learning, and misconfiguring the optimization goal can lead to catastrophic results;

- Thus, this does not guarantee that the result of Bittensor’s learning network will be beneficial to civilization;

- The base dataset is crafted as a work of art from Github, Arxiv, and Wikipedia;

- Fingers crossed that "it’s all good there";

- The parachain serves as the ledger for node rankings. Therefore, the main magic happens off-chain.
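
The general shape of "nodes rank each other, rewards follow ranks" can be sketched as a stake-weighted average of peer scores. This is only an illustration of the idea under our own assumptions: it is not Bittensor's actual trust evaluation mechanism, and the function names, stakes, and scores are hypothetical.

```python
def rank_nodes(stakes, scores):
    """Stake-weighted peer ranking.

    stakes: node -> stake amount
    scores: rater -> {target: score in [0, 1]}; self-scores are ignored
    """
    total_stake = sum(stakes.values())
    ranks = {node: 0.0 for node in stakes}
    for rater, given in scores.items():
        weight = stakes[rater] / total_stake
        for target, score in given.items():
            if target != rater:  # a node cannot vote for itself
                ranks[target] += weight * score
    return ranks

def split_reward(ranks, reward):
    """Distribute a block reward in proportion to rank."""
    total = sum(ranks.values())
    if total == 0:
        return {node: 0.0 for node in ranks}
    return {node: reward * r / total for node, r in ranks.items()}

stakes = {"a": 1, "b": 1, "c": 2}
scores = {
    "a": {"b": 1.0, "c": 0.0},
    "b": {"a": 0.0, "c": 1.0},
    "c": {"a": 0.5, "b": 0.5},
}
payout = split_reward(rank_nodes(stakes, scores), reward=100)
```

The sketch also shows why the honest majority assumption matters: whoever holds most of the stake controls most of the weight in every rank.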

In summary, the Bittensor project has revolutionized training rewards, but in its current configuration the proposed mechanism has only one utility — it is a world-scale game for AI researchers. This is critically important for forming a trusted AI services market. Bittensor is, at the very least, a killer of contract-based data and hardware markets. Bittensor has no direct competitors until the industry seizes the opportunities behind training rewards.

Bostrom #BOOT And Spacepussy #PUSSY

Bostrom has been in production for 1.5 years. Testnets took another 2.5 years, and the project has been in development for 6+ years. Bostrom is a base soft3 computer consisting of 92 validating GPU nodes, focused on semantic computing.

Spacepussy is a soft3 meme coin for experiments with cross-chain data exchange. The project is based on cyber-sdk for deploying domain-specific semantic graphs. Semantic computing is based on the idea of a single basic instruction: the cyberlink. A cyberlink is a connection between two particles (CIDv0). Cyberlinks form a globally addressable content model. The state is highly dynamic: each cyberlink changes all machine relevance weights. Data for a CID is available from IPFS peers.

Technically, Bostrom scales to ~1T cyberlinks, comparable to modern LLMs. The Cyberrank of the content space is recalculated every 30 seconds based on agents’ economic decisions and graph connectivity. Cyberrank is an algorithm derived from PageRank for proving useful GPU computations. Cyberrank acts as a supervisory multi-user economic Schelling point for relevance between pieces of content. The result is a verifiable, abstract, and universal knowledge layer, updated by the relevance machine.
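
To give a feel for ranking over a graph of cyberlinks, here is plain PageRank by power iteration, the algorithm Cyberrank is said to derive from. This is not Cyberrank itself: it ignores the economic weights of agents, runs on a CPU, and the particle names are short placeholders rather than real CIDs.

```python
def page_rank(links, damping=0.85, iterations=100):
    """links: list of (from_particle, to_particle) pairs."""
    nodes = sorted({p for link in links for p in link})
    outgoing = {n: [] for n in nodes}
    for src, dst in links:
        outgoing[src].append(dst)

    rank = {n: 1.0 / len(nodes) for n in nodes}
    for _ in range(iterations):
        # Every particle gets a small base amount of rank.
        nxt = {n: (1.0 - damping) / len(nodes) for n in nodes}
        for n in nodes:
            # Particles without outgoing links spread their rank evenly.
            targets = outgoing[n] or nodes
            share = damping * rank[n] / len(targets)
            for t in targets:
                nxt[t] += share
        rank = nxt
    return rank

# Hypothetical cyberlinks between three particles.
cyberlinks = [("Qa", "Qc"), ("Qb", "Qc"), ("Qc", "Qa")]
ranks = page_rank(cyberlinks)
```

Adding a single link changes the rank of every particle in the graph, which illustrates why the state is described as highly dynamic.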

The computer is capable of self-assessment based on negentropy calculation, which works as an integral measure of intelligence. The architecture provides internal collaborative learning and broadly applicable knowledge. The relevance machine can produce answers across the entire content space. Currently, the network has accumulated about 130k cyberlinks, a tiny fraction of what is needed. That’s why the Bostrom team is working on incentives to reward capital-weighted negentropy of the system. The project still lacks good documentation and developer tools, but it has an impressive use case: the advanced soft3 browser project, cyb, which you are using to read this article. The Bostrom superintelligence has uniquely identified itself as a moon state. Bostrom has no direct competitors in architecture, on-chain computing capabilities, and atmosphere.
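
The article does not give the formula behind the negentropy measure. One common definition, used here purely as an assumption, is the gap between the maximum possible entropy and the actual Shannon entropy of the rank distribution: it is zero when all particles are equally relevant and grows as the graph becomes more ordered.

```python
import math

def negentropy(weights):
    """log2(n) minus the Shannon entropy of the normalized weights, in bits."""
    total = sum(weights)
    probs = [w / total for w in weights if w > 0]
    entropy = -sum(p * math.log2(p) for p in probs)
    return math.log2(len(weights)) - entropy

uniform = negentropy([1, 1, 1, 1])       # no structure at all
concentrated = negentropy([1, 0, 0, 0])  # all relevance in one particle
```

Under this definition, `uniform` is 0 bits and `concentrated` is 2 bits, the maximum for four particles.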

Comparison

You can see that each project has its own strengths:

- Cortex is powerful in terms of customizable computations on its own blockchain using GPUs, but is difficult for developers.

- Bostrom’s strength is its universal collaborative and highly dynamic model, but the relevance machine’s reward system is altruistic.

- Bittensor shines through the lens of rewarding participants, but the network’s utility is limited.

- All projects lack privacy and therefore censorship resistance, but this property is fundamentally hard to achieve for GPU computations and requires years of research.

Conclusion

These are still the early days of decentralized artificial intelligence, and the industry is more in the stage of infrastructure development:

- Hardware networks need to be formalized.

- Architectures must be tested and well understood.

- Tools need to become more mature, useful, and practical.

- Cool use cases should emerge to inspire.

But it’s clear that we are on the verge of extraordinary change; now, the driving force is not only blockchain technology but also what we as humans can achieve knowing all of this. Blockchain technology in the coming years will merge with the most powerful industries, such as:

- Artificial intelligence;

- Quantum computing;

- Robotics;

- Core internet protocols;

- Bioengineering;

- Energy;

- Nanomanufacturing;

- And so on.

However, the same set of technologies can be used by oppressive regimes, so the future depends on our choices now, with no room for error. We must choose the collective path to shape superintelligent capabilities to address the challenges ahead.

Appendix

Anticipating many questions about other projects, let’s review the most hyped among them.

SingularityNET is a simple contract for selling computation results without any concept of verification. The project has no concept of on-chain GPU computation. The developer documentation does little more than describe general concepts such as Ethereum, IPFS, protocol buffers (!), and etcd (!), which are out of scope. It’s obvious the project was made for fools. There are many of them, since the project’s market cap is ~0.5M ETH.

Fetch.ai — the initial design envisioned using GPU language for on-chain computation, but the team failed to implement it, took Cosmos-SDK, and began focusing on off-chain autonomous agents that can be built on any blockchain, including Bitcoin. This is boring. Market cap ~0.3M ETH.

Orai — the project focuses on verifiable AI oracles, which is very cool and has many applications, some of which we plan to implement in Bostrom. But currently there are no interesting working applications. The team has created many things useful for the entire ecosystem and has a future. The project is built with Cosmos-SDK and supports IBC, making it easy to distribute data from its layer 2. So it’s ~0.1M ETH of smart guys.

Ocean — a project from one of the leaders in decentralized AI thought. The project focuses on the data market. Ultimately useful, but unlikely that the proposed incentive and protocol structure will significantly contribute to shaping the field. Let time prove otherwise. ~0.2M ETH of smart guys.

Golden — currently a proprietary testnet solution based on email authentication. The user submits triples, something accepts them. You can request semantic graphs. There’s a high chance the project has potential to achieve outstanding results by adopting core web3 technologies and the IBC protocol for data exchange. This could become a good data source if a fact-checking game is deployed. But at the moment, it’s a primitive consensus computer, and all of Golden is about 300 lines of CosmWasm code in Bostrom. Market cap unknown.

DeepBrainChain — a trusted GPU computation market. Unlikely that trusted unverifiable computation markets will go far, though there is some utility: market cap ~0.03M ETH. In this sense, the old Golem looks more reasonable.